Why Jarvis Can't Come Soon Enough

Why Trust, Not Technology, Is the Real Barrier to Our Jarvis Future

Remember how Tony Stark interacts with Jarvis in Iron Man? He trusts Jarvis completely: with his schedule, his personal safety, and even his billion-dollar Iron Man suits. It's cool, it's efficient, and deep down, we all want a Jarvis. But here's the weird part: we've already built technology that's pretty close to Jarvis in many ways. Yet most of us won't even let an AI book a dinner reservation without second-guessing it.

So why is that?

It's simple: the real barrier isn't technology. It's trust.

The Tech Is Ready, but We Aren't

AI has come a long way. Today, tools like GPT-4, Gemini, and Claude can do things we'd never have imagined a few years ago. They can summarize your emails, schedule your meetings, draft your proposals, and even build entire websites. In other words, they can already handle many of the everyday tasks Jarvis handled for Tony Stark.

But even with these powerful capabilities, we still hesitate. We keep AI at arm's length, carefully limiting its access and double-checking everything it does. The problem? We're just not comfortable yet.

We Have Trust Issues

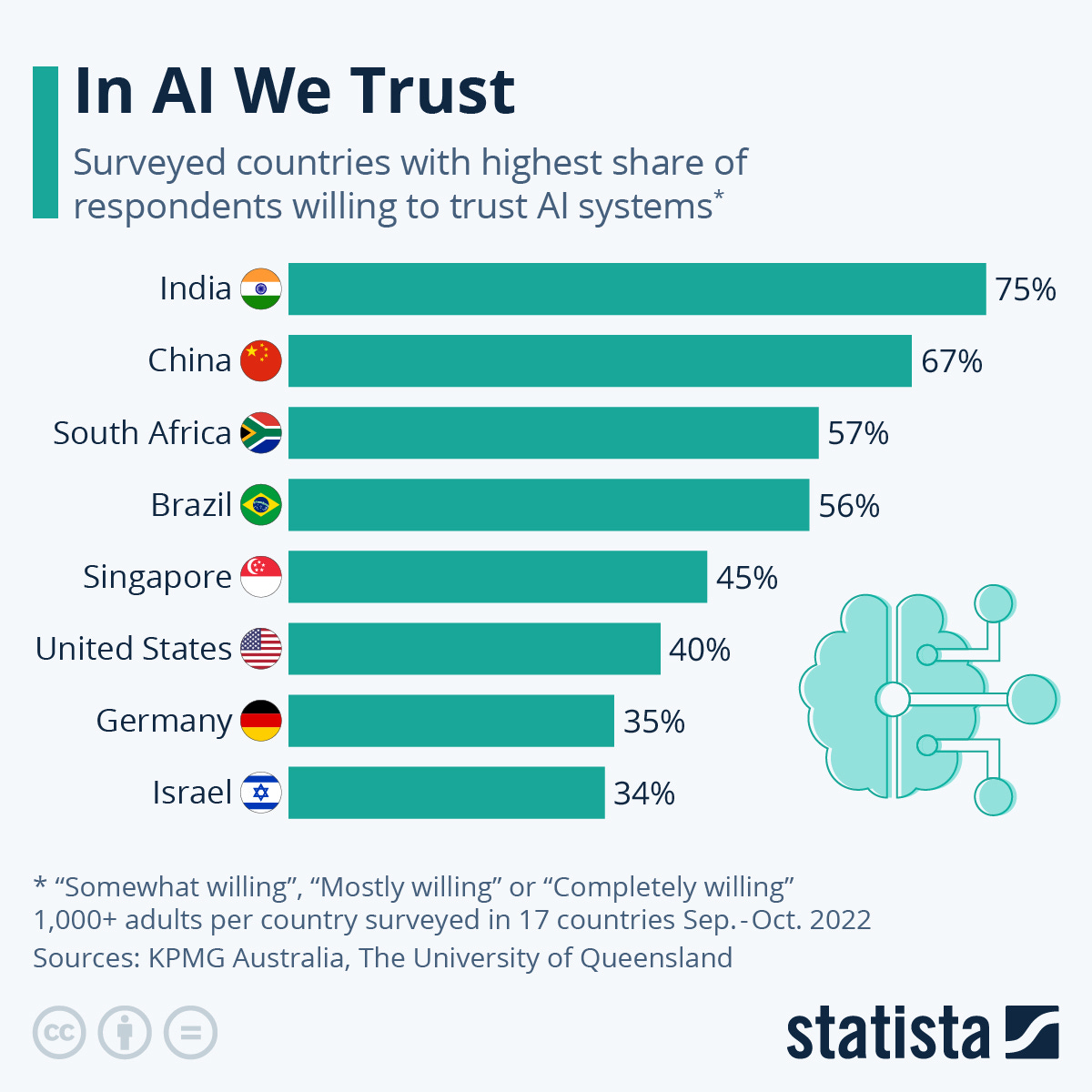

Humans have trust issues, especially with technology. Think about it:

Personal apps and messages: You don't fully trust an AI to read your emails and make decisions for you. It's like handing your unlocked phone to a stranger. Feels weird, right?

Professional tasks: Companies are often reluctant to give AI access to sensitive data like confidential client communications or financial reports. The tech could handle it, sure, but trusting it fully feels risky.

We tend to imagine worst-case scenarios instead of focusing on how much more convenient life could become. Call it human nature or good old-fashioned caution: we just don't want things to go wrong.

Psychology Behind the Trust Gap

Let's unpack why we feel this way:

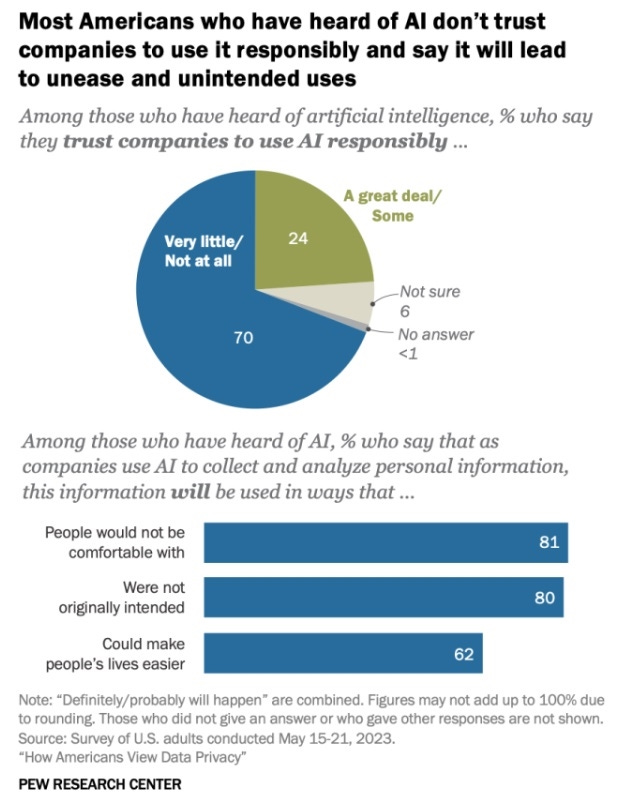

Fear of loss: We worry more about what we might lose (privacy, control, security) than about what we might gain (time, convenience, efficiency). Psychologists call this loss aversion.

Privacy paradox: We want the ease of AI helping us with everything, but we're not ready to sacrifice our privacy to get it. We're stuck between convenience and caution.

Consider a familiar example: self-driving cars. The technology is impressive, yet most people still prefer to drive themselves. Many people hold back from AI assistants for the same reason: they prefer to stay in control, even if it's less efficient.

What Will It Take to Trust AI?

Trust isn't built overnight. It needs:

Transparency: If an AI clearly tells you what data it's accessing and why, you're more likely to trust it.

Control: You need the ability to set clear limits on what AI can and can't do.

Accountability: Knowing exactly who is responsible if something goes wrong helps build trust.

Think about banks. Why do we trust them with our money? Because they've had decades to prove they can be trusted (most of the time). AI needs the same gradual trust-building period.

Building a Future with Jarvis

Here's the good news: we're already making progress. Companies are starting to emphasize privacy and transparency. Apple promotes its privacy-first approach, and Google publishes explanations of how it uses your data. Steps like these lay the foundation for trust.

So, when will we see a real-world Jarvis?

Not when AI gets smarter (it's already pretty smart), but when we become comfortable enough to trust it completely.

---

Jarvis isn't stuck because AI isn't good enough yet. It's waiting on us to get comfortable enough to let AI into our lives without feeling anxious about it.

Honestly, it can't come soon enough.